This is a paper by David Woods and Robert Hoffman. They go over the different rules, bounds, and cognitive laws that govern cognitive systems. The title of the paper comes from the work of Herbert Simon, whose work showed only “one slice” through the trade-offs that are a part of all of these sorts of systems.

This means these are things you're going to want to know and understand when creating SRE programs, internal tools, or developer experience teams.

By understanding the bounds that govern these systems, you can work with them and prepare for the pitfalls as you approach the boundaries.

Just by having such a team, though, you're already at risk for one of these issues. If you have a separate team that does this work, then you are ensuring that the designers are at least a little distant from the people whose problems they are going to be solving.

One way of minimizing this gap is to have your team involved with the same problems. This is easier and can work well when the problems are things like deploying and releasing, though even here there is a danger that the team will solve the problem for their own way of working and not consider other ways.

Another way of offsetting this risk is to shadow or embed with teams to better understand the ways they work and the constraints they face. For more on this sort of thing, check out the verbal reports issue.

When working with systems, “there is no cognitive vacuum”: people always develop mental models of the technology they work with.

This means it is up to system developers and designers (probably you) to create systems in such a way that the models people develop are accurate (to some degree) and useful.

The difficulty here is that as system creators we are often far away from the people we are designing for. This gap leaves lots of room for misunderstanding to creep in.

People will fill the gaps between the way the tools and processes were designed and the work that they need to do. This is good, as it is a source of resilience, but it can also become a problem. If that gap is very large, then you're asking them to devote a large amount of time and effort to filling it so that they can get things done. Also, as they get better and more practiced at this, things will begin to look relatively easy from some perspectives. Seeing where your team can help will require multiple perspectives.

This is the “Law of Fluency.” We'll examine a few more of the boundaries, which will show us the trade-offs and “laws” that are a core part of cognitive work in sociotechnical systems, exactly the sort of system we work in. You can read more about laws around cognitive work here.

Another approach to gaining those perspectives is using an embedded rotation or tour system combined with interview skills. If you want to know more about interview skills, you can watch my talk or read more. But as we'll see, there are trade-offs that must be made here.

Everything is a trade-off, including creating, designing, and maintaining systems. There are five fundamental trade-offs, and from these we can see five families of laws. If you want to read more about these families of laws, you can read my analysis.

Bounded Ecology

There are always gaps between a system and its environment; they'll never match perfectly. Also, the environment is constantly changing.

Adapting takes effort and resources. It can be easier or harder at different times and during different events. If you spend resources to protect against one or a few particular failure modes, you'll be more vulnerable to others. Anticipating surprise events takes resources as well.

Whether we can or do make that investment, to what degree, and in what places leads to the “Optimality-Resilience of Adaptive Capacity Trade-off”. Or, if you want to phrase it in terms of the negative, the “optimality-fragility trade-off”.

Bounded Cognizance

“There is always ‘effort after meaning’.”

Effort after meaning is a term from psychology for the continued effort it takes to understand new information and to put it into practice.

Though there's effort involved, both to gain and to use information, the level of effort can vary at different times.

This leads to the Efficiency-Thoroughness of Situated Plans Trade-Off.

Efficient plans are the usual plans, those often used, like standard operating procedures. While they are efficient, they can be difficult to apply as the situation diverges from what was imagined or predicted when the plan was made.

This includes runbooks, and it is why they can be so difficult to work with. Since a runbook represents what was planned or anticipated at the time it was written, it can differ wildly from the circumstances in which it is needed.

When this is overlooked, you can find teams or orgs trapped in a spiral: have an incident, push to update the runbooks, only to find that this does not save the day in the next incident.

If the org recognizes this spiral, it can break out at this point, but if it doesn't, it'll likely continue for another (or several) iterations, constantly pushing for the runbooks to be made just that much better in the fruitless hope that someday they'll reach some imagined perfect point. Since we know that there is “bounded ecology,” the perfect match will never happen. At some point we have to say it's good enough.

On the other hand, thorough plans are larger and account for more, but can be harder to execute or to change once they are underway.

The authors say “bounded cognizance” instead of Simon's “bounded rationality” because the model of cognition could be a program or “agent,” not just a human as Simon originally discussed.

Bounded Perspectives

“Every perspective both reveals and hides certain aspects of the scene of interest”

This is similar to the ways of knowing I discussed in the Investigating and contrasting ways of knowing issue.

Systems can shift perspectives, but outside of a limited band it is expensive. There will always be some time, effort, or other resource cost to gaining and integrating new perspectives.

This leads us to the Revelation-Reflection on Perspectives Trade-off. Adding perspectives adds to what is seen, but it takes effort to integrate that perspective.

Bounded Responsibility

“all systems are simultaneously cooperative over shared goals and potentially competitive when goals conflict”

Chronic goals tend to be sacrificed in favor of acute goals. This can lead to an inability to notice sources of risk and brittleness.

This leads us to the Acute-Chronic Goal Responsibility Trade-off.

Effort should be expended to help ensure reciprocity, or else different groups will be prone to working at cross purposes when facing goal conflicts.

Bounded Effectiveness

Systems can neither do everything nor know everything. This leads to trade-offs in how authority is distributed. Wider distribution can mean more effective action, but it adds the burden of keeping groups synchronized.

Centralization requires less coordination, but can be less effective. It integrates fewer viewpoints and thus can see fewer solutions.

Distribution increases the ability to recognize and consider interdependencies, whereas centralization does the opposite.

“change directed only at one part within the system often inadvertently triggers deleterious effects of other aspects of the system that cancel out or outweigh the intended benefits”

This leads us to the Concentrated-Distributed Action Trade-off. There is a trade-off between centralizing authority and decision-making power and distributing it. These are the two extremes of a continuum, not a binary yes/no choice. The question is not “do I distribute authority or not?”; the answer to that is hopefully an easy yes.

The questions here are:

- How much authority to distribute?

- To where? (what roles or levels?)

- Under what conditions?

As with the other trade-offs, this one is dynamic; it will likely change over time and in different situations, especially when making sacrifice decisions and taking safing actions.

Unlike the other trade-offs, though, this one is the most apparent in most organizations and also the most explicitly addressed. At the very least, some of it tends to be addressed by incident response frameworks and plans.

In truth, though, there is still some ambiguity here. The plans and frameworks aren't going to specify every single permitted action (though some may try). Additionally, people are going to perceive different levels of autonomy available to them.

If you've been a reader for a while, you know that I advise distributing decision-making authority to those who are doing the work and have expertise. (If you want personalized incident response advice, reach out to me; I have a small number of slots available.)

If you distribute authority entirely throughout an organization and provide no centralized structure around which coordination and synchronization can happen, then you'll have fragmentation. Some fragmentation will happen throughout the trade space in any case, though.

Putting it all together

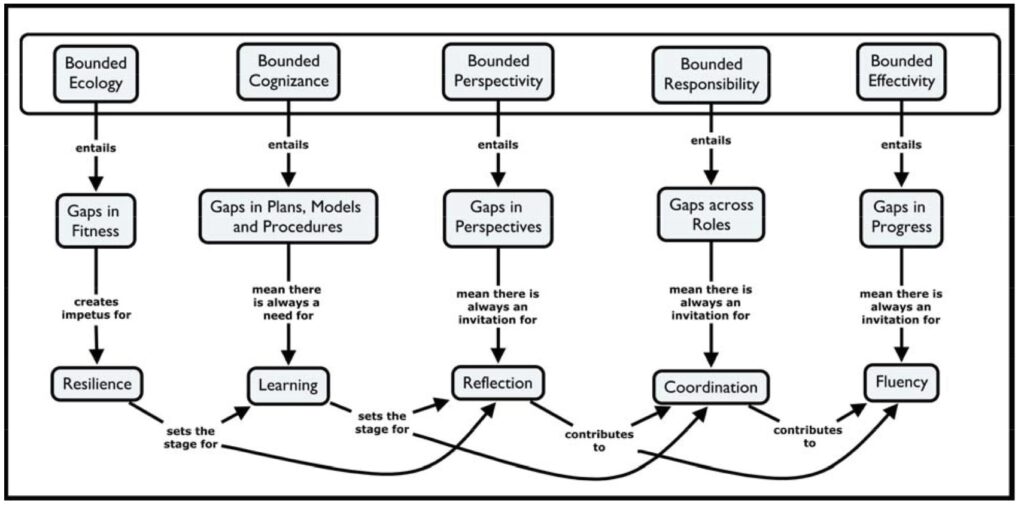

An earlier version of this work that the authors presented at the NDM converence that contained a diagram that helps show how this all fits together:

Takeaways

- When working with cognitive systems, like those in incident response and SRE, there are always trade-offs. Understanding these trade-offs and bounds can help create better, more effective systems.

- When looking at the laws of cognitive systems, we can see that many of them have to do with the limits on those systems.

- The five trade-offs are:

  - Optimality-Resilience of Adaptive Capacity Trade-off

    - How much is invested in anticipating surprise? How much in guarding against specific failure modes, which can leave the system vulnerable to other problems?

  - Efficiency-Thoroughness of Situated Plans Trade-off

    - Are the usual plans used, which may be hard to fit to the situation at hand, or a more thorough plan that can be harder to get going and to change?

  - Revelation-Reflection on Perspectives Trade-off

    - How much is invested in integrating new perspectives so that more can be seen and understood?

  - Acute-Chronic Goal Responsibility Trade-off

    - Long-term or chronic goals tend to be sacrificed in favor of short-term or acute goals. Where is that trade made?

  - Concentrated-Distributed Action Trade-off

    - How much decision-making authority is centralized? How much is distributed?


- When working with systems, “there is no cognitive vacuum”: people always develop mental models of the technology they work with.

- The law of fluency tells us that people adapt and fill gaps when doing work. This adaptation is a source of resilience, but can make it difficult to see the adaptation itself.

- When people adapt, they make it look easy, so it can be difficult to see where you might be able to intervene or assist. You need multiple perspectives in order to overcome this.

Subscribe to Resilience Roundup
